this post was submitted on 07 Jan 2025


linuxmemes

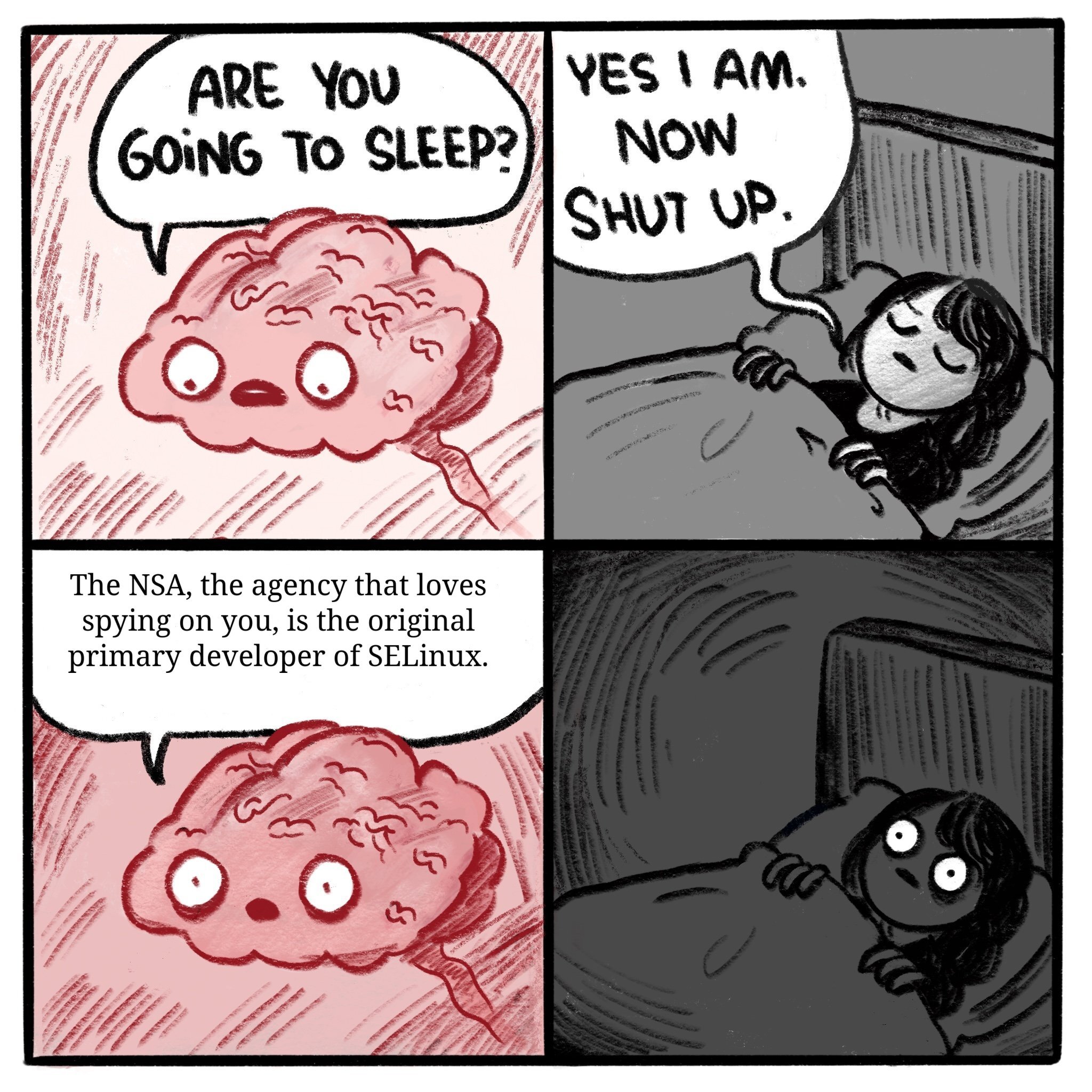

I'm not sure why that's a problem. The NSA needed strong security so they created a project to serve the need. They are no longer in charge of SELinux but I wouldn't be surprised if they still worked on it occasionally.

There are a lot of reasons to not like the NSA but SELinux is not one of them.

That's the trouble with the NSA. They want to spy on people, but they also need to protect American companies from foreign spies. When you use their stuff, it's hard to be sure which part of the NSA was involved, or if both were in some way.

The NSA has a fairly specific pattern of behavior. They work in the shadows, not in the open. They target things with low visibility so their work is hard to trace. Backdooring SELinux would be uncharacteristic and silly. They target things like hardware supply chains and ISPs. Their operations aren't even that covert, as they work with companies.

The specific example I'm thinking of is DES. They messed with the S-boxes, and nobody at the time knew why. The assumption was that they weakened them.

However, some years later, cryptographers working in public developed differential cryptanalysis to break ciphers. Turns out, those changed S-boxes made it difficult to apply differential cryptanalysis. So it appears they actually made it stronger.

But then there's this other wrinkle. They limited the key size to 56 bits, which even at the time was known to be too small. Computers would eventually catch up to that. Nation states would be able to break it, and eventually, well-funded corporations would be able to break it. That time came in the 90s.
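To put rough numbers on the key size point, here's a quick back-of-the-envelope sketch. The search rates are made-up illustrative values, not benchmarks of any real machine, and the function names are just mine. It only shows how fast a 56-bit keyspace shrinks once hardware gets quick enough.

```python
# Rough brute-force arithmetic for a 56-bit key.
# The search rates below are illustrative assumptions, not measurements.

KEY_BITS = 56
SECONDS_PER_DAY = 86_400


def keyspace(bits: int) -> int:
    """Number of possible keys for a key of the given length."""
    return 2 ** bits


def days_to_exhaust(bits: int, keys_per_second: float) -> float:
    """Days needed to try every key at a constant search rate."""
    return keyspace(bits) / keys_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"{KEY_BITS}-bit keyspace: {keyspace(KEY_BITS):,} keys")
    for rate in (1e6, 1e9, 1e11):  # hypothetical keys/second
        days = days_to_exhaust(KEY_BITS, rate)
        print(f"{rate:.0e} keys/s -> {days:,.1f} days worst case")
```

On average you'd hit the key after searching about half the space, so halve the worst-case figures. Every extra key bit doubles them, which is why a few bits either way mattered so much.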

It appears they went both directions with that one. They gave themselves a window where they would be able to break it when few others could, including anything they had stored away over the decades.

Honestly I think it ultimately comes down to the size of the organization. Chances are the right hand doesn't know what the left hand is doing.

I do like the direction the US is heading in. Some top brass have finally caught on that you can't limit access to back doors.

They were a bit too public with "Dual_EC_DRBG", to the point where everybody just assumed it had a backdoor and avoided it. The NSA ended up having to pay people to use it.

So, how many backdoors do you think they implemented into the kernel?

None

There are always exploits to be used. Also, there isn't a lot of use in kernel-specific exploits.