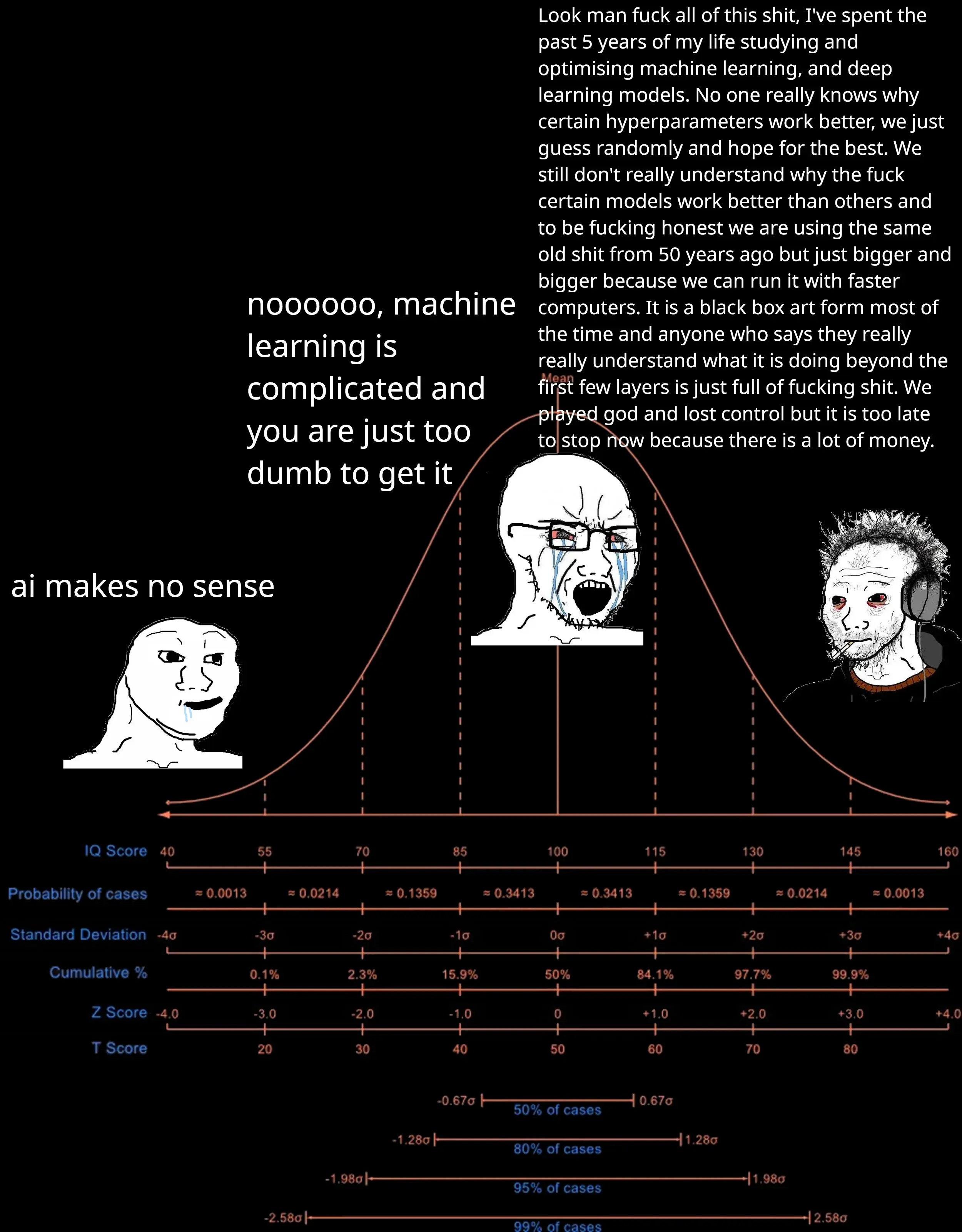

Dude on the right is correct that perturbed gradient descent with threshold functions and backprop feedback was implemented before most of us were born.

The current boom is an embarrassingly parallel task meeting an architecture designed to run that kind of task.
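
To make "embarrassingly parallel" concrete, here is a rough sketch, not anyone's actual training code. It assumes a toy one-parameter least-squares model; the names `example_grad`, `batch_grad_serial`, and `batch_grad_parallel` are made up for illustration. The point is that each example's gradient depends only on the current weight and that one example, so the per-example work can be farmed out with no communication until the final sum.

```python
from concurrent.futures import ThreadPoolExecutor


def example_grad(w, x, y):
    """Gradient of 0.5 * (w*x - y)**2 with respect to w, for one example."""
    return (w * x - y) * x


def batch_grad_serial(w, data):
    """Average the per-example gradients one after another."""
    return sum(example_grad(w, x, y) for x, y in data) / len(data)


def batch_grad_parallel(w, data, workers=4):
    """Same average, but the per-example gradients are mapped over a pool.

    No worker needs anything from another worker; the only shared step
    is the sum at the end.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        grads = list(pool.map(lambda xy: example_grad(w, *xy), data))
    return sum(grads) / len(data)


# Toy data generated by y = 2 * x (an assumption for this sketch).
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

w = 0.0
for _ in range(200):
    w -= 0.05 * batch_grad_parallel(w, data)
```

Threads in CPython won't actually speed this up; the pool is only there to show the shape of the computation. Hardware built for this kind of task runs the same independent map over thousands of lanes at once.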