Every boomer seems to go like this: "You shouldn't trust anyone without fact-checking."

30 years later: "Let's trust every shoe salesman and ChatGPT, they are my new friends."

"We did it, Patrick! We made a technological breakthrough!"

Is that not what ChatGPT was made for? Industrial-scale misinformation?

I think the intention was to legalize plagiarism under the guise of helping humanity. Only if corporations do it, of course.

And to make us plebes even dumber.

No, if he cited inaccurate information it was because he didn't check it. Same as if he cited something he heard from a guy on the bus.

I think there's some shared blame. ChatGPT existing and marketing itself as useful makes people believe it. If you have to double-check everything it says, what is the point of using it in the first place? This isn't unsolicited information that came up naturally while chatting with someone and should be checked; this is something you have to specifically choose to use.

And Google throws it in your face, so you have to be very careful about it. For years, when you Googled something, the first thing shown would be a snippet from a website, so if you Googled "PayPal fee" it would show a snippet from a website mentioning a PayPal fee. Now the result in the same place and in the same style is an LLM response.

Yeah, it's definitely a good reason to just ditch Google altogether now.

LLMs are undoubtedly impressive tech that will get better with time. But to anyone singing their praises too emphatically I say ask it something on a topic you are an expert on; you’ll quickly see how fallible they currently are.

Problem is a lack of expertise with most people. Most people I interact with are generally oblivious to most things, including their careers lol.

Tbh, if they game, get them to ask it about that; it fails spectacularly, even worse than in general. It's a bit better on TV shows and movies, probably because there are so many episode summaries and reviews online, but if you talk to it long enough and ask for varied and specific enough information, it'll fail there too.

They may not be an expert at something, but if they have a specific interest or hobby that'll probably work.

LLMs are not AI. Calling them that says more about the person than the technology. And then I realize, they might be right... /s

Calling LLMs AI isn't wrong at all; it's just that sci-fi has made people think AI always means something as smart as a human. Heck, the simple logic controlling the monsters in Minecraft is called AI.

Are you suggesting LLMs aren't powering ChatGPT, the AI front end this post is about? Or am I just missing your joke (which is possible)?

It's sarcasm. And yes, a joke. Along the same lines as 'Think of your average person, and realize half the population is dumber'.

He didn't cite wrong information (only) because of ChatGPT, but because he lacks the instinct (or training, or knowledge) to verify the first result he either sees or likes.

If he had googled for the information and his first click was an article that was giving him the same false information, he would've probably insisted just the same.

LLMs sure make this worse, since much more of the information coming out of them is wrong, but the root cause is the same as it was before they became prevalent. Incidentally, it's the reason misinformation campaigns work so well and are so easy.

Edit: removed distraction

ChatGPT is unfortunately fully capable of generating false information without ever being given it.

Googling could have also returned bad info. Lemmy has bad info. A newspaper could have reported bad info about PayPal. Bad info isn't an AI problem.

The fact that ChatGPT returned bad info means most of the internet has bad info about PayPal's rates.

Well, sure. But if you go to the PayPal website, you can see the correct information. Before Google's AI popped up at the top of the screen, the PayPal website would have been the top result. In this situation, Google is now prioritizing the misinformation that its AI found on some outdated website over the official PayPal website that has the correct info. That's the issue.

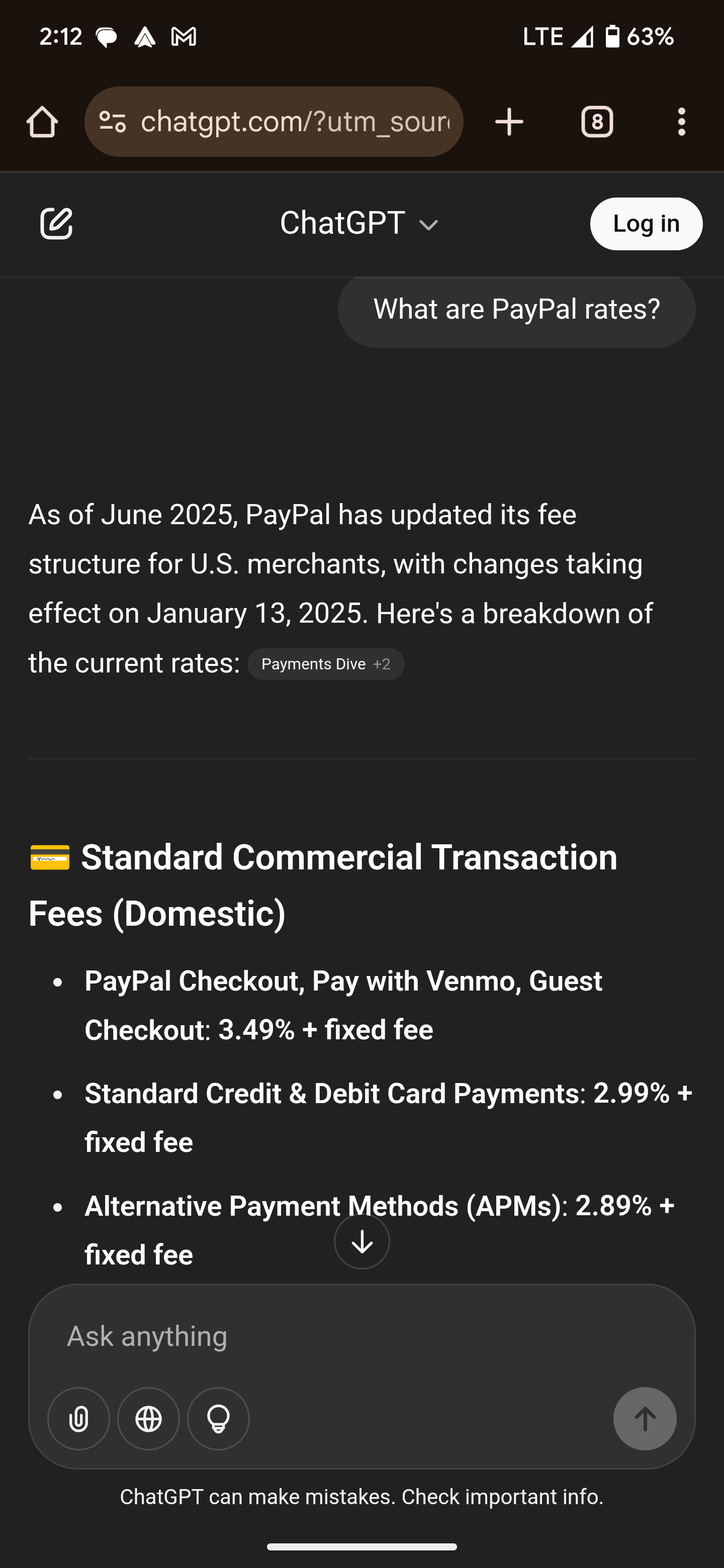

The OP said ChatGPT. I just tried it:

And I thought it weird that OP said his dad asked ChatGPT. Who uses ChatGPT instead of Google for stuff like that?

This screenshot doesn't really prove anything; that's not how ChatGPT works. It might have given you the right info and someone else the wrong info.

Even if they were static, deterministic things (which, in end-user services like ChatGPT, they aren't), just giving two slightly different prompts could cause something like this to happen.
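To illustrate the non-determinism point: chat services generally sample each next piece of text from a probability distribution instead of always picking the single most likely option. The minimal Python sketch below assumes a toy three-way choice with made-up scores; the candidate names, scores, and temperature values are hypothetical and are not ChatGPT's actual internals. It shows how one fixed prompt can produce different answers on different runs.

```python
import math
import random


def softmax(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_answer(candidates, logits, temperature, rng):
    """Pick one candidate at random, weighted by its temperature-scaled probability."""
    probs = softmax(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical candidate continuations and scores for one fixed prompt.
candidates = ["answer A", "answer B", "answer C"]
logits = [2.0, 1.5, 0.5]

# Same prompt, same scores, different random seeds: the picks can differ.
for seed in range(5):
    rng = random.Random(seed)
    print(seed, sample_answer(candidates, logits, temperature=1.0, rng=rng))
```

At a very low temperature the distribution collapses onto the top-scoring candidate, so output becomes nearly repeatable; at higher temperatures the less likely answers show up more often.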

Ah, yeah, sorry, my brain scrambled that. But same point, really. ChatGPT doesn't always pull its data from the current official website either, so same problem. ChatGPT and Google are loudly marketing "Hey, you don't need to search for the info, our AI will give it to you," when the AI is wrong a lot.

The problem is a lack of critical thinking skills. There is only one reliable way to get information about this and it's from the primary source.

?

Did he ask it whether PayPal has a 5% fee? Sounds like he might have been arguing about it, tried to fact-check himself, and maybe ChatGPT told him what he wanted to hear?

Why does everyone have to make up stories about AI? AI is bad enough without bullshit stories.

OP lies!!

The fee varies based on multiple factors, but international with currency conversion it can actually reach an estimated total of 5%.
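To show how several fees can stack up to a total near 5%, here is a minimal Python sketch. The rates are hypothetical placeholders chosen only for the arithmetic, not PayPal's published fees; check PayPal's own fee page for real numbers. The component names (base rate, cross-border surcharge, currency-conversion spread, flat fee) are likewise illustrative assumptions.

```python
def effective_fee_percent(amount, percent_fees, fixed_fee):
    """Total fee as a percentage of the amount: stacked percentage fees plus a flat fee."""
    total_fee = amount * sum(percent_fees) / 100 + fixed_fee
    return 100 * total_fee / amount


# Hypothetical components, NOT PayPal's actual rates:
# a base rate, a cross-border surcharge, and a currency-conversion spread.
percent_fees = [2.0, 1.5, 1.0]
fixed_fee = 0.30  # hypothetical flat fee per transaction

print(round(effective_fee_percent(100.0, percent_fees, fixed_fee), 2))  # prints 4.8
print(round(effective_fee_percent(10.0, percent_fees, fixed_fee), 2))
```

The flat fee matters more on small payments, which is one reason the "real" percentage depends on how the question is asked.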

So technically the information wasn't wrong either; most likely your dad asked the wrong question or didn't understand the answer.

Searching on DDG gives a similar answer btw.

It's not LLMs ruining his brain, he's just dumb on the topic.

This is happening to me more and more often. It's infuriating.

Is your dad my boss?